
Customize how the video captures

Introduction

When the ZEGOCLOUD SDK's default video capture module cannot meet your application's requirements, the SDK allows you to customize the video capture process. With custom video capture enabled, you manage video capture on your own and send the captured video data to the SDK, which then handles video encoding and stream publishing. You can still call the SDK's API to render the local preview, so you don't have to implement rendering yourself.

Listed below are some scenarios where enabling custom video capture is recommended:

- Your application needs to use a third-party beauty SDK. In such cases, you can perform video capture and video preprocessing with the beauty SDK, and then pass the preprocessed video data to the ZEGOCLOUD SDK for video encoding and stream publishing.

- Your application needs to use the camera for another task during live streaming, which would conflict with the ZEGOCLOUD SDK's default video capture module. For example, it needs to record a short video clip during live streaming.

- Your application needs to live stream with video data captured from a non-camera video source, such as a video file, a screen to be shared, or live video game content.

Prerequisites

Before you begin, make sure you complete the following:

- Create a project in ZEGOCLOUD Admin Console and get the AppID and AppSign of your project.

- Refer to the Quick Start doc to complete the SDK integration and basic function implementation.

Implementation process

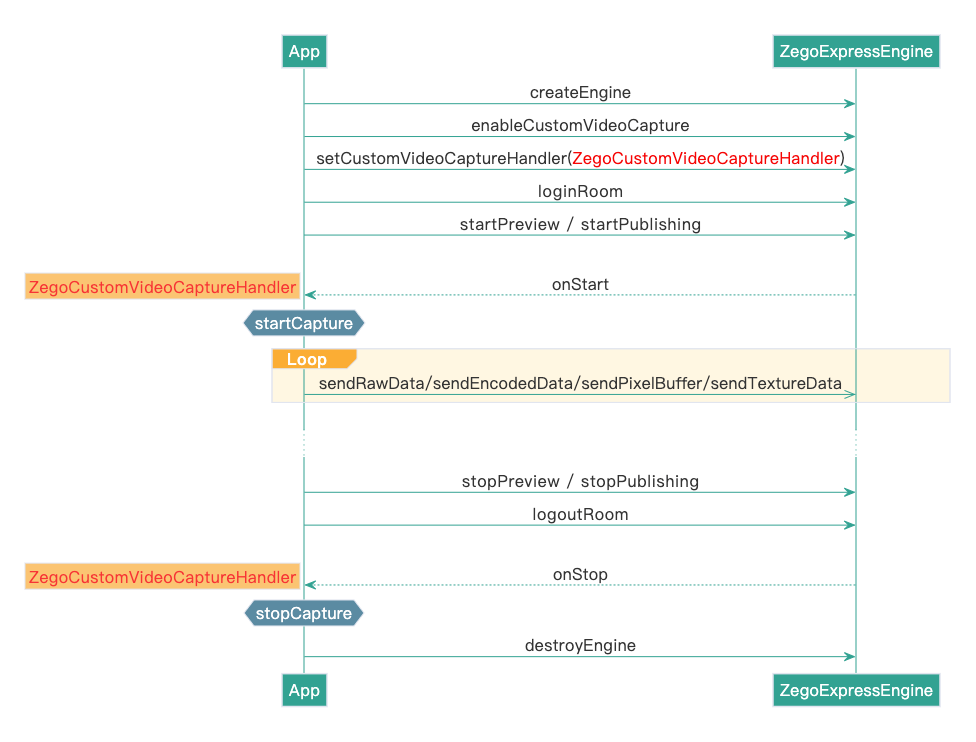

The process of custom video capture is as follows:

- Create a ZegoExpressEngine instance.

- Enable custom video capture.

- Set up the event handler for custom video capture callbacks.

- Log in to the room and start publishing the stream; the onStart callback will be triggered.

- On receiving the onStart callback, start sending video frame data to the SDK.

- When the stream publishing stops, the onStop callback will be triggered. On receiving this callback, stop the video capture.
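The lifecycle above can be sketched in plain Java, assuming a fixed frame rate. The sketch does not call the ZEGOCLOUD SDK: FixedRateCapturer and FrameSink are hypothetical names, and FrameSink stands in for whichever send method matches your bufferType (for example, sendCustomVideoCaptureRawData). onStart starts a timer that produces placeholder frames, and onStop cancels it.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class FixedRateCapturer {

    /** Hypothetical stand-in for the SDK send call, e.g. sendCustomVideoCaptureRawData. */
    public interface FrameSink {
        void send(byte[] frame, long timestampMs);
    }

    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();
    private final FrameSink sink;
    private final long periodMs;
    private ScheduledFuture<?> task;

    public FixedRateCapturer(FrameSink sink, int fps) {
        if (fps <= 0) {
            throw new IllegalArgumentException("fps must be positive");
        }
        this.sink = sink;
        // Send interval derived from the target frame rate.
        this.periodMs = Math.max(1, 1000L / fps);
    }

    /** Call from onStart: begin producing frames and handing them to the sink. */
    public synchronized void onStart() {
        if (task != null) {
            return; // already capturing
        }
        task = scheduler.scheduleAtFixedRate(() -> {
            byte[] frame = new byte[16]; // placeholder for a real captured frame
            sink.send(frame, System.currentTimeMillis());
        }, 0, periodMs, TimeUnit.MILLISECONDS);
    }

    /** Call from onStop: stop producing frames. */
    public synchronized void onStop() {
        if (task != null) {
            task.cancel(false);
            task = null;
        }
    }

    public synchronized boolean isCapturing() {
        return task != null;
    }

    /** Release the capture thread when the capturer is no longer needed. */
    public void shutdown() {
        onStop();
        scheduler.shutdownNow();
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicInteger sent = new AtomicInteger();
        FixedRateCapturer capturer =
                new FixedRateCapturer((frame, ts) -> sent.incrementAndGet(), 30);
        capturer.onStart(); // as if the SDK's onStart callback had fired
        Thread.sleep(200);
        capturer.onStop();  // as if the SDK's onStop callback had fired
        System.out.println("frames sent: " + sent.get());
        capturer.shutdown();
    }
}
```

In a real handler, capturer.onStart() and capturer.onStop() would be called from the SDK's onStart and onStop callbacks. A single-threaded scheduler keeps frames in order; a camera-driven pipeline would more typically send each frame from the camera's own frame callback instead of a timer.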

Refer to the API call sequence diagram below to implement custom video capture in your project:


To use custom video capture, keep the camera enabled by leaving enableCamera set to true (the default setting). Otherwise, there will be no video data when you publish streams.

Enable custom video capture

To enable the custom video capture feature, do the following:

- Create a ZegoCustomVideoCaptureConfig object to hold the custom video capture configuration.

- Set its bufferType property to specify the data type used to send captured video frame data to the SDK.

- Call the enableCustomVideoCapture method to enable the custom video capture feature.

On Android, the SDK supports multiple video frame types (bufferType), and you need to tell the SDK which type you will use. The following types are currently supported: raw data (RAW_DATA), OpenGL Texture 2D (GL_TEXTURE_2D), and encoded data (ENCODED_DATA). If you set any other value, you cannot send frame data to the SDK.

```java
ZegoCustomVideoCaptureConfig videoCaptureConfig = new ZegoCustomVideoCaptureConfig();
// Set the data type of the captured video frame to RAW_DATA.
videoCaptureConfig.bufferType = ZegoVideoBufferType.RAW_DATA;
engine.enableCustomVideoCapture(true, videoCaptureConfig, ZegoPublishChannel.MAIN);
```

Set up the custom video capture callback

To receive callback notifications related to custom video capture, call the setCustomVideoCaptureHandler method to set up an event handler (an IZegoCustomVideoCaptureHandler object) that listens for and handles the onStart and onStop callbacks.

When you use custom video capture for multiple streams, you need to specify the stream-publishing channel in the onStart and onStop callbacks, each of which receives a channel parameter. Otherwise, by default, only the main channel's event callbacks are notified.
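A minimal sketch of per-channel handling, assuming each publishing channel has its own capture source. PublishChannel is a local stand-in for ZegoPublishChannel so that the sketch compiles without the SDK, and ChannelCaptureRouter, CaptureSource, and PrintingSource are hypothetical names. The router's onStart and onStop methods show what the bodies of the SDK callbacks could do with the channel parameter: start or stop only the source bound to that channel.

```java
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.Map;
import java.util.Set;

public class ChannelCaptureRouter {

    /** Local stand-in for ZegoPublishChannel (hypothetical subset of values). */
    public enum PublishChannel { MAIN, AUX }

    /** Hypothetical capture source: camera, screen, video file, and so on. */
    public interface CaptureSource {
        void start();
        void stop();
    }

    private final Map<PublishChannel, CaptureSource> sources = new EnumMap<>(PublishChannel.class);
    private final Set<PublishChannel> running = EnumSet.noneOf(PublishChannel.class);

    public synchronized void register(PublishChannel channel, CaptureSource source) {
        sources.put(channel, source);
    }

    /** Body for onStart(channel): start only the source bound to that channel. */
    public synchronized void onStart(PublishChannel channel) {
        CaptureSource source = sources.get(channel);
        if (source != null && running.add(channel)) {
            source.start();
        }
    }

    /** Body for onStop(channel): stop only the source bound to that channel. */
    public synchronized void onStop(PublishChannel channel) {
        CaptureSource source = sources.get(channel);
        if (source != null && running.remove(channel)) {
            source.stop();
        }
    }

    public synchronized boolean isRunning(PublishChannel channel) {
        return running.contains(channel);
    }

    /** Minimal source that only prints its state changes. */
    public static class PrintingSource implements CaptureSource {
        private final String name;

        public PrintingSource(String name) {
            this.name = name;
        }

        @Override
        public void start() {
            System.out.println(name + " started");
        }

        @Override
        public void stop() {
            System.out.println(name + " stopped");
        }
    }

    public static void main(String[] args) {
        ChannelCaptureRouter router = new ChannelCaptureRouter();
        router.register(PublishChannel.MAIN, new PrintingSource("camera"));
        router.register(PublishChannel.AUX, new PrintingSource("screen"));
        router.onStart(PublishChannel.MAIN);
        router.onStart(PublishChannel.AUX);
        router.onStop(PublishChannel.MAIN); // the screen source on AUX keeps running
    }
}
```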

```java
// Register an IZegoCustomVideoCaptureHandler to receive the custom video capture callbacks.
engine.setCustomVideoCaptureHandler(new IZegoCustomVideoCaptureHandler() {
    @Override
    public void onStart(ZegoPublishChannel channel) {
        // On receiving onStart, start your own capture process for this channel
        // (e.g., turn on the camera) and start sending video frame data to the SDK.
    }

    @Override
    public void onStop(ZegoPublishChannel channel) {
        // On receiving onStop, stop your own capture process for this channel
        // (e.g., turn off the camera) and stop sending video frame data to the SDK.
    }
});
```

Send the captured video frame data to the SDK

When you call startPreview to start the local preview or call startPublishingStream to start the stream publishing, the callback onStart will be triggered.

On receiving this callback, start your video capture process, and then send the captured video frame data to the SDK by calling the method that matches the bufferType you configured, as listed in the table below:

| Video frame type | bufferType | Method to send captured frame data |
|---|---|---|
| Raw data | RAW_DATA | sendCustomVideoCaptureRawData |
| OpenGL Texture 2D | GL_TEXTURE_2D | sendCustomVideoCaptureTextureData |
| Encoded data | ENCODED_DATA | sendCustomVideoCaptureEncodedData |

If you send encoded video frame data to the SDK with the sendCustomVideoCaptureEncodedData method, the SDK cannot render the local preview, so you need to implement the preview yourself.

See below an example of sending the captured video frame data in RAW_DATA format to the SDK.

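```java
// Send a captured video frame in raw data format to the ZEGO SDK.
// Reallocate the direct buffer only if it is missing or too small for this frame.
if (byteBuffer == null || byteBuffer.capacity() < data.length) {
    byteBuffer = ByteBuffer.allocateDirect(data.length);
}
byteBuffer.clear();   // reset position/limit before writing the new frame
byteBuffer.put(data);
byteBuffer.flip();    // prepare the buffer for reading by the SDK
// param describes the frame (format, size, strides); now is the frame's reference timestamp in milliseconds.
engine.sendCustomVideoCaptureRawData(byteBuffer, data.length, param, now);
```

When both the stream publishing and the local preview are stopped, the onStop callback will be triggered. On receiving this callback, you can stop the video capture process, for example, by turning off the camera.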

Optional: Set the transform matrix for captured images

To rotate or scale the captured images, call the setCustomVideoCaptureTransformMatrix method after receiving the onStart callback.

For more details about the matrix parameter, see SurfaceTexture.getTransformMatrix.

- This feature only takes effect when bufferType is set to GL_TEXTURE_2D.

- This feature is recommended for developers with OpenGL experience.
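To illustrate the matrix format, the sketch below builds a rotation matrix in the 4x4 column-major float[16] layout that SurfaceTexture.getTransformMatrix uses, rotating texture coordinates counterclockwise around the texture center (0.5, 0.5). It assumes that setCustomVideoCaptureTransformMatrix expects the same layout, as the reference above suggests; TextureTransform and its methods are hypothetical helpers and do not call the SDK.

```java
public class TextureTransform {

    /**
     * Builds a column-major 4x4 matrix (index = column * 4 + row) that rotates
     * texture coordinates counterclockwise by `degrees` around (0.5, 0.5).
     */
    public static float[] rotationAboutCenter(double degrees) {
        double rad = Math.toRadians(degrees);
        float c = (float) Math.cos(rad);
        float s = (float) Math.sin(rad);
        float[] m = new float[16];
        // Columns 0 and 1: the 2D rotation.
        m[0] = c;
        m[1] = s;
        m[4] = -s;
        m[5] = c;
        // Column 2: the z axis is unchanged.
        m[10] = 1f;
        // Column 3: translation t - R * t, which keeps the center t = (0.5, 0.5) fixed.
        m[12] = 0.5f - 0.5f * c + 0.5f * s;
        m[13] = 0.5f - 0.5f * s - 0.5f * c;
        m[15] = 1f;
        return m;
    }

    /** Applies a column-major 4x4 matrix to the texture coordinate (u, v, 0, 1). */
    public static float[] apply(float[] m, float u, float v) {
        float x = m[0] * u + m[4] * v + m[12];
        float y = m[1] * u + m[5] * v + m[13];
        return new float[] {x, y};
    }

    public static void main(String[] args) {
        float[] m = rotationAboutCenter(90);
        float[] p = apply(m, 1f, 0f);
        System.out.printf("(1, 0) -> (%.3f, %.3f)%n", p[0], p[1]); // (1.000, 1.000)
    }
}
```

Rotating around the center rather than the origin keeps the rotated coordinates inside the [0, 1] texture range.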

Optional: Set the state of the capturing device

To set the state of the custom capturing devices after receiving the onStart callback, call the setCustomVideoCaptureDeviceState method as needed.

For the stream player to obtain the state of the capturing device, listen for the onRemoteCameraStateUpdate callback.

If the stream publisher sets the device state to ZegoRemoteDeviceState.DISABLE or ZegoRemoteDeviceState.MUTE by calling the setCustomVideoCaptureDeviceState method, the stream player cannot receive an event notification through the onRemoteCameraStateUpdate callback.

When the stream publisher turns off the camera with the enableCamera method, the stream player receives the device state ZegoRemoteDeviceState.DISABLE through the onRemoteCameraStateUpdate callback.

When the stream publisher stops publishing the video stream with the mutePublishStreamVideo method, the stream player receives the device state ZegoRemoteDeviceState.MUTE through the onRemoteCameraStateUpdate callback.

FAQs

- How to use the "OpenGL Texture 2D" data type to transfer the captured video data?

Set the bufferType attribute of the ZegoCustomVideoCaptureConfig object to GL_TEXTURE_2D, and then call the sendCustomVideoCaptureTextureData method to send the captured video data.

- With custom video capture enabled, the local preview is working fine, but the remote viewers see distorted video images. How to solve the problem?

This happens because the aspect ratio of the captured video differs from the aspect ratio of the SDK's default encoding resolution. For example, if the captured video has a 4:3 aspect ratio but the SDK's default encoding resolution is 16:9, you can solve the problem with any one of the following options:

- Option 1: Change the video capture aspect ratio to 16:9.

- Option 2: Call setVideoConfig to set the SDK's video encoding resolution to one with a 4:3 aspect ratio.

- Option 3: Call setCustomVideoCaptureFillMode to set the video fill mode to "ASPECT_FIT" (the video will have black padding areas) or "ASPECT_FILL" (part of the video image will be cropped out).
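To see what Option 3 does to a 4:3 frame in a 16:9 encoding, the sketch below computes the size and offset of the scaled image for aspect-fit and aspect-fill scaling. It models only the geometry, not the SDK's internal implementation; AspectFitFill is a hypothetical helper.

```java
import java.util.Arrays;

public class AspectFitFill {

    /** Scale so the whole source is visible; returns {width, height, offsetX, offsetY}. */
    public static int[] fit(int srcW, int srcH, int dstW, int dstH) {
        double scale = Math.min((double) dstW / srcW, (double) dstH / srcH);
        return place(srcW, srcH, dstW, dstH, scale);
    }

    /** Scale so the target is fully covered; returns {width, height, offsetX, offsetY}. */
    public static int[] fill(int srcW, int srcH, int dstW, int dstH) {
        double scale = Math.max((double) dstW / srcW, (double) dstH / srcH);
        return place(srcW, srcH, dstW, dstH, scale);
    }

    private static int[] place(int srcW, int srcH, int dstW, int dstH, double scale) {
        int w = (int) Math.round(srcW * scale);
        int h = (int) Math.round(srcH * scale);
        // Center the scaled image: a positive offset is padding, a negative one is cropping.
        return new int[] {w, h, (dstW - w) / 2, (dstH - h) / 2};
    }

    public static void main(String[] args) {
        // 4:3 capture (640x480) encoded at a 16:9 resolution (1280x720).
        System.out.println(Arrays.toString(fit(640, 480, 1280, 720)));  // [960, 720, 160, 0]
        System.out.println(Arrays.toString(fill(640, 480, 1280, 720))); // [1280, 960, 0, -120]
    }
}
```

For 640x480 into 1280x720, fitting leaves 160-pixel black bars on the left and right, while filling crops 120 pixels from the top and the bottom.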

- After the custom video capture is enabled, the video playback frame rate is not the same as the video capture frame rate. How to solve the problem?

Call setVideoConfig to set the encoding frame rate to the same value as the video capture frame rate (that is, the frequency at which you call sendCustomVideoCaptureRawData or sendCustomVideoCaptureTextureData).
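To check that the frame rate you pass to setVideoConfig matches how often you actually send frames, you can measure the send rate. FrameRateMeter is a hypothetical helper, not part of the SDK: it keeps the timestamps of sent frames in a sliding window and reports frames per second over that window.

```java
import java.util.ArrayDeque;

public class FrameRateMeter {
    private final ArrayDeque<Long> stamps = new ArrayDeque<>();
    private final long windowMs;

    public FrameRateMeter(long windowMs) {
        this.windowMs = windowMs;
    }

    /** Call right after each send call, with the timestamp (ms) of that frame. */
    public void onFrameSent(long timestampMs) {
        stamps.addLast(timestampMs);
        // Drop timestamps that fall outside the sliding window.
        while (timestampMs - stamps.peekFirst() > windowMs) {
            stamps.removeFirst();
        }
    }

    /** Frames per second over the current window, or 0 if there is not enough data. */
    public double currentFps() {
        if (stamps.size() < 2) {
            return 0.0;
        }
        long span = stamps.peekLast() - stamps.peekFirst();
        return span <= 0 ? 0.0 : (stamps.size() - 1) * 1000.0 / span;
    }

    public static void main(String[] args) {
        FrameRateMeter meter = new FrameRateMeter(1000);
        for (long t = 0; t <= 1000; t += 100) { // one frame every 100 ms
            meter.onFrameSent(t);
        }
        System.out.println(meter.currentFps()); // 10.0
    }
}
```

If the measured value differs noticeably from the fps configured with setVideoConfig, adjust one of the two so that they match.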

- Does the SDK process the received video frame data synchronously or asynchronously?

When the SDK receives video frame data, it first copies the data synchronously and then performs encoding and other operations asynchronously. Therefore, you can release the captured video frame data as soon as it has been passed to the SDK.

- How to implement video rotation during the custom video capture?

During custom video capture, when the device orientation changes, you can switch the video between portrait and landscape orientation in either of the following two ways:

- Process the video frame data yourself: In the device orientation change callback, rotate the captured video frame data, and then pass the processed data to the SDK by calling the sendCustomVideoCaptureTextureData method.

- Let the SDK process the video frame data: In the device orientation change callback, set the rotation property of the ZegoVideoEncodedFrameParam object based on the actual orientation, and then call the sendCustomVideoCaptureEncodedData method to send the video frame data, together with the rotation parameter, to the SDK.
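For the first approach, the rotation itself can be sketched on the CPU for a single 8-bit plane, such as the Y plane of a raw frame. This is a simplification under stated assumptions: PlaneRotation is a hypothetical helper, chroma planes (for example, the U and V planes of I420) would need the same rotation at their own resolution, and texture data would more typically be rotated on the GPU. After a 90-degree rotation the frame's width and height swap, so the dimensions you report with the frame would also need to be swapped.

```java
import java.util.Arrays;

public class PlaneRotation {

    /**
     * Rotates a single 8-bit plane 90 degrees clockwise.
     * Input is row-major (width x height); output is row-major (height x width).
     */
    public static byte[] rotate90Clockwise(byte[] src, int width, int height) {
        if (src.length < width * height) {
            throw new IllegalArgumentException("plane is smaller than width * height");
        }
        byte[] dst = new byte[width * height];
        int dstWidth = height;
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                // Source pixel (x, y) moves to column (height - 1 - y), row x.
                dst[x * dstWidth + (height - 1 - y)] = src[y * width + x];
            }
        }
        return dst;
    }

    public static void main(String[] args) {
        // 3x2 plane with rows "1 2 3" and "4 5 6".
        byte[] src = {1, 2, 3, 4, 5, 6};
        System.out.println(Arrays.toString(rotate90Clockwise(src, 3, 2))); // [4, 1, 5, 2, 6, 3]
    }
}
```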